Project Description

AI Univeral Translator for Inclusive Government

===============================================

Our Mission

Our mission is to enhance civic life, driving democratic renewal and build a sense of belonging by fostering social inclusion.

We aim to empower all citizens—regardless of language, background, ability, or accessibility needs—to fully participate in society by improving their access to vital information and services.

Additionally, we strive to equip government staff at all levels—local, state, and federal—with AI-powered tools that serve as co-pilots, reducing administrative burdens and improving overall efficiency in delivering public services.

"We’re committed to increasing cultural participation, and helping businesses to innovate, adapt and grow."

-City of Sydney

“Australia is a prosperous, safe and united country. Our inclusive national identity is built around our shared values including democracy, freedom, equal opportunity and individual responsibility.”

-Department of Home Affairs

Our Approach

We are developing a universal translator, a trusted and personalised tool designed to bridge communication gaps between the government and the diverse population it serves. This tool will be especially impactful for individuals with disabilities, ensuring that communication barriers are removed and that they have equitable access to information.

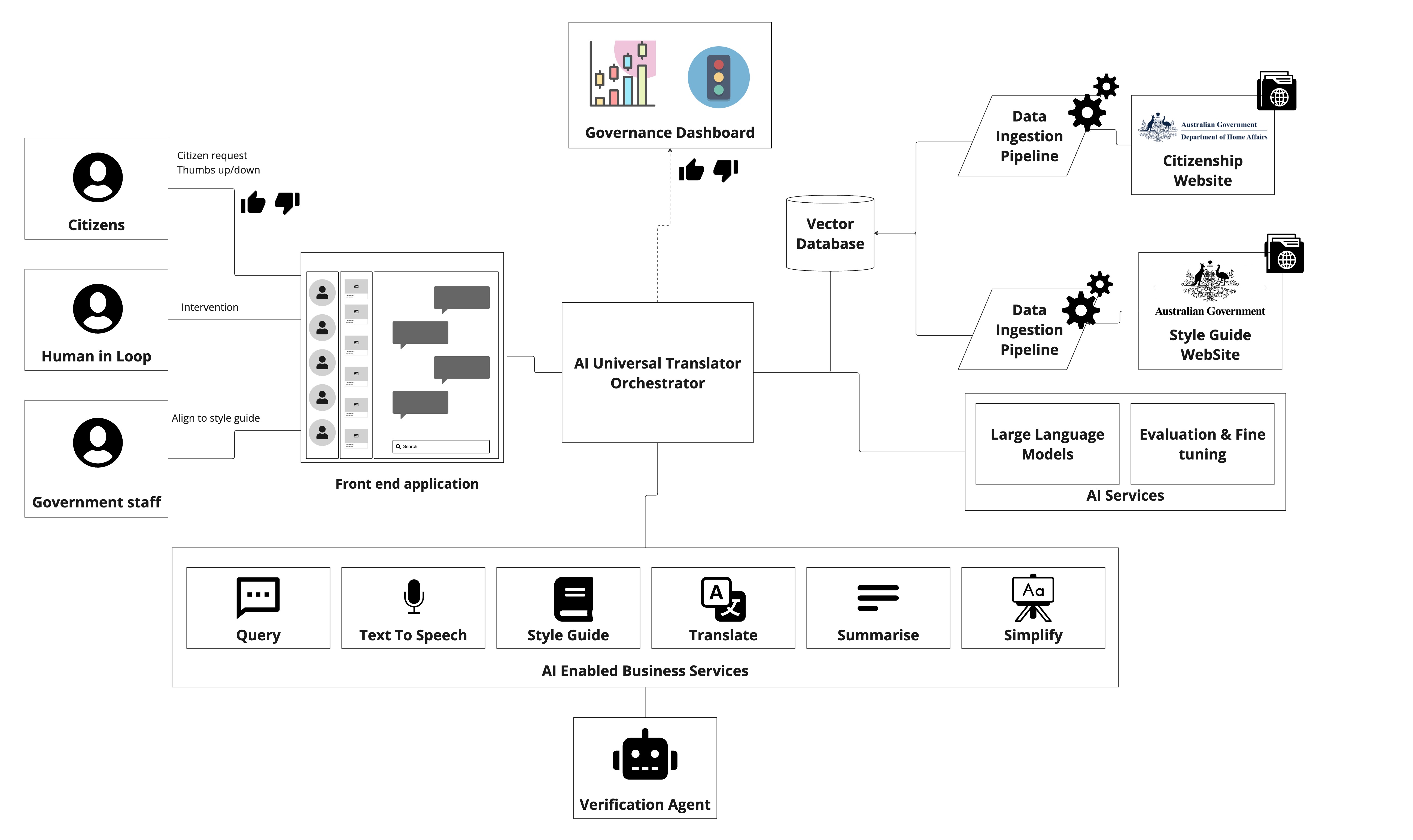

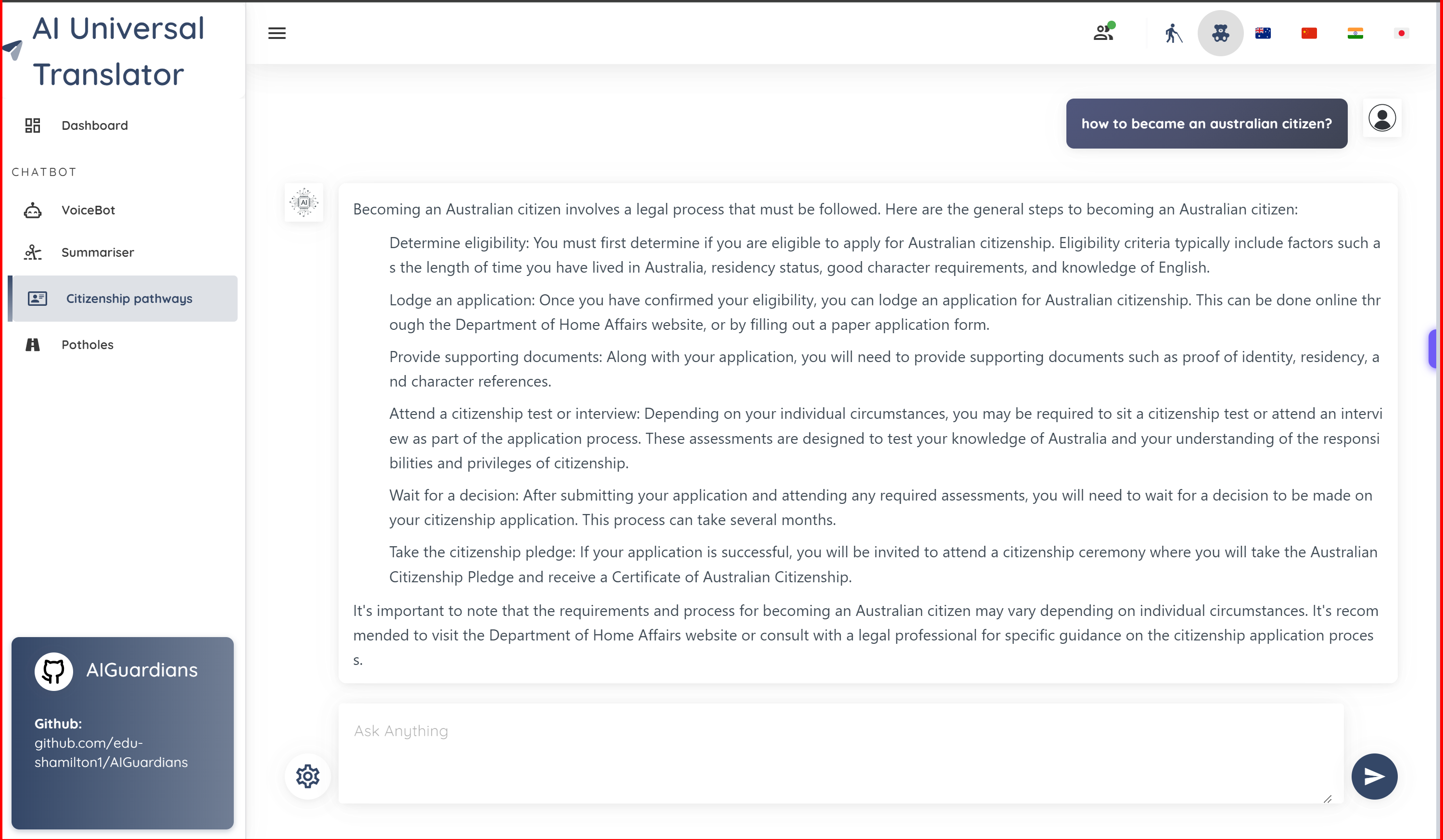

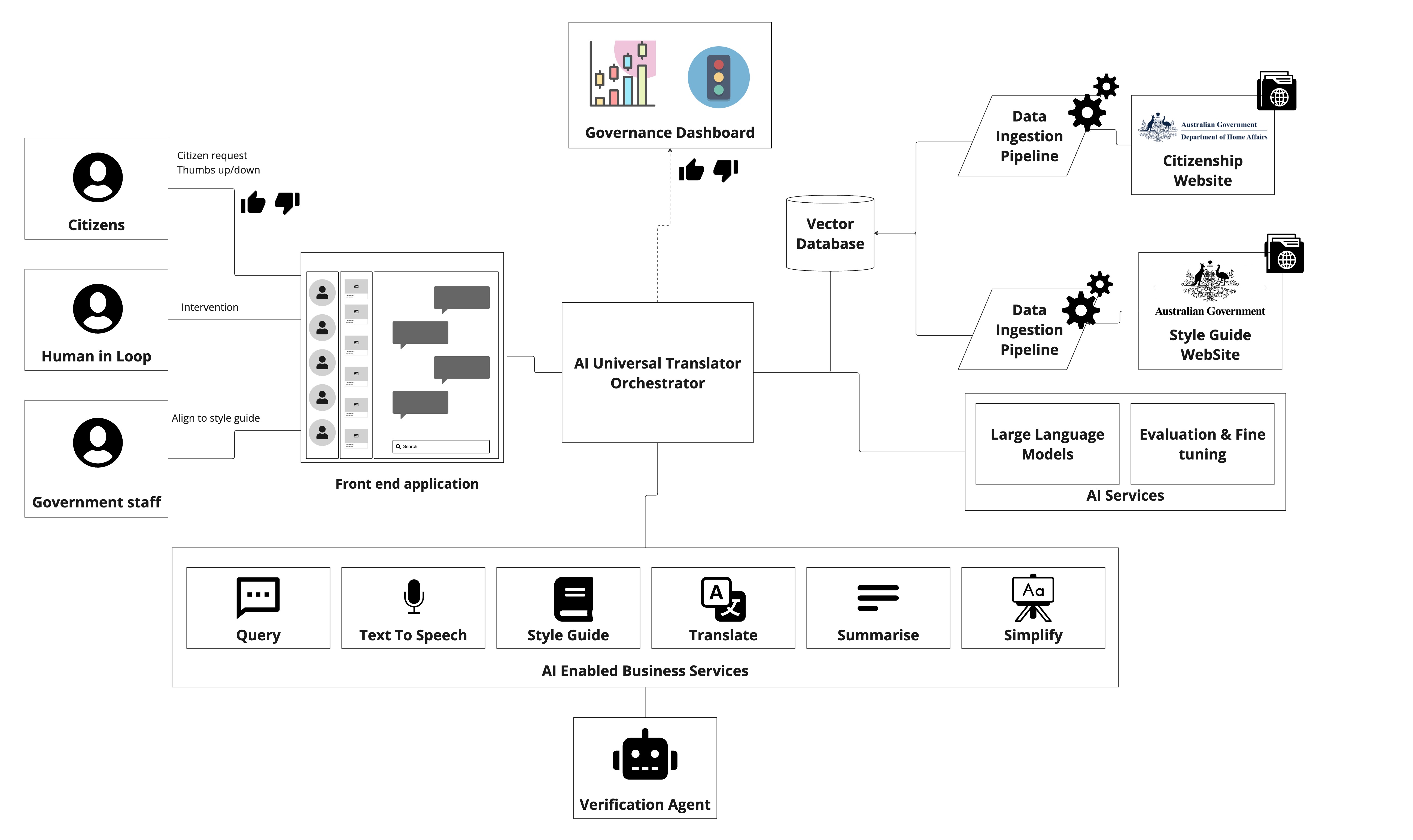

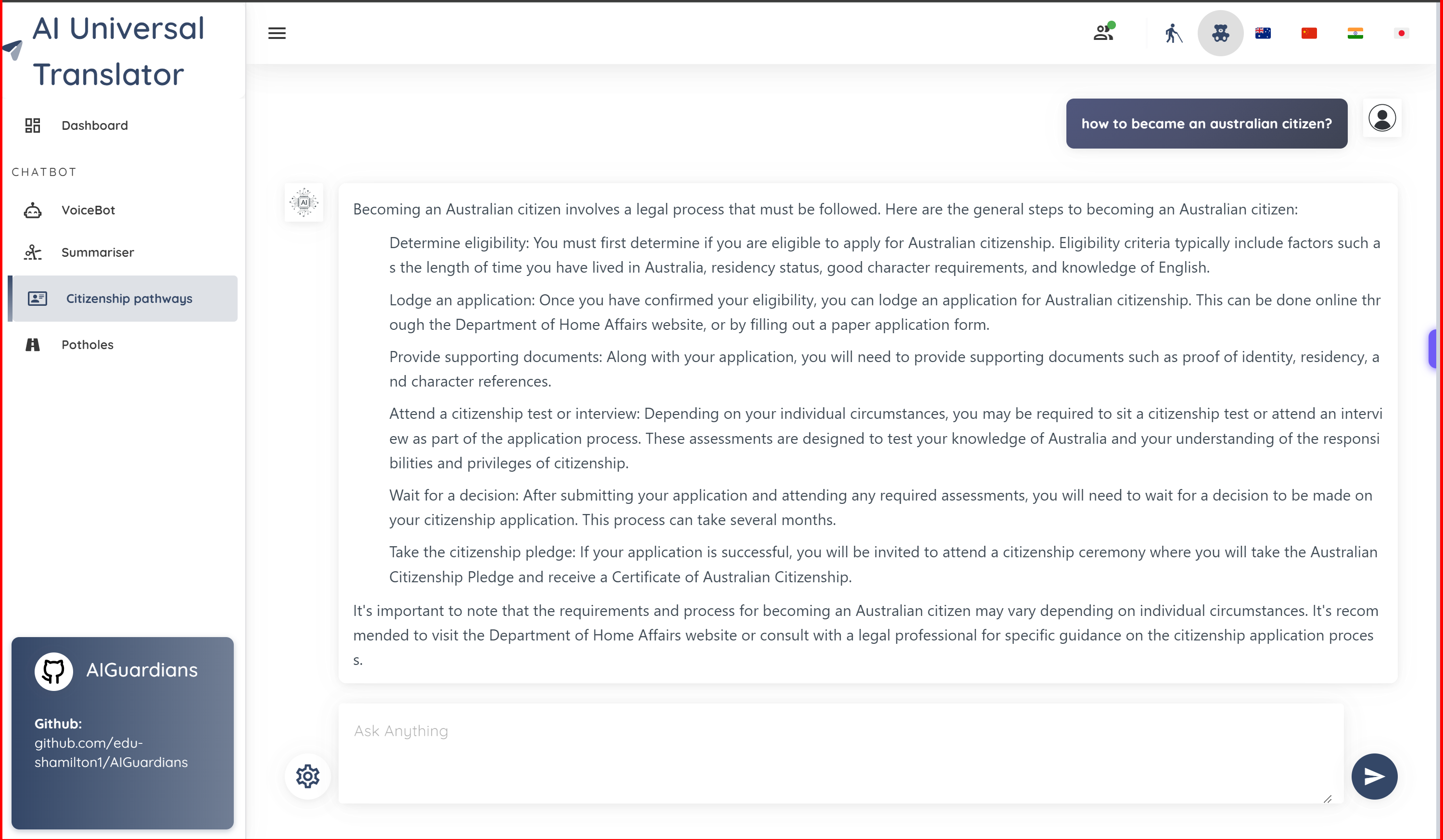

In our approach, we have developed a minimal viable project (MVP) or prototype showcasing a range of AI-powered services designed to form the foundation of a universal translator for inclusive government.

This translator adapts to multiple access channels, focusing on the ones most relevant to citizens, tailored to their context and accessibility needs.

Key features of our MVP include:

Citizenship queries: Responds to inquiries using data exclusively from citizenship websites.

Text-to-speech: Converts written content into speech for those who prefer or require auditory formats.

Summarization: Provides concise summaries of complex information.

Simplified language: Adjusts reading levels to ensure content is accessible to all literacy levels.

Language translation: Translates content into the user’s preferred language.

Government staff query (review): Reviews documents for plain language compliance.

Government staff query (translation): Translates official documents into plain, easily understandable language.

Autonomous verification: Uses autonomous agents to verify the accuracy of generated responses.

Transparency dashboard: Offers both citizens and government agencies a clear view of AI performance, ensuring transparency and accountability.

Use cases

Potential use cases for our universal translator could include include:

Non-English Speaker Engaging with Local Council

A resident who does not speak English can confidently communicate with their local council in their native language. They can ask questions, raise concerns, and receive accurate responses in real time, without needing a translator, ensuring privacy and direct communication.

Simplifying Complex Government Forms

Faced with lengthy, complicated government forms, a citizen uses the system to automatically simplify the documentation into plain, readable language. Key terms and sections are explained in an easy-to-understand manner, helping them navigate the process with confidence.

Accessibility for Low Literacy Levels

An individual with a low reading level uses the tool to automatically simplify a government document. The system breaks down complex language into simpler terms, making it easier for them to understand and engage with essential information.

Time-Poor Citizen Receiving Summaries

A busy citizen can quickly receive a summary of important government information or reports. Instead of sifting through detailed documents, the system provides them with concise, clear summaries of the key points, saving them time while keeping them informed.

Reporting Issues in Plain Language

A citizen who wants to report a pothole or other issue can easily do so using the tool, which allows them to describe the problem in plain English. The system captures their input and formats it appropriately for submission to the relevant government department, avoiding the need for complex forms.

Government Employee Ensuring Document Compliance

A government employee uses the system to review a document they’ve written, ensuring it aligns with the latest guidelines from the Australian Style Manual. The tool checks for adherence to style, tone, punctuation, and inclusive language, offering suggestions for improvement before final submission.

Data Source Verification

The system reviews incoming information from various sources (social media, public platforms, news outlets, etc.) and cross-references it with authoritative and trusted databases to check for accuracy and reliability.

Disinformation Detection Using AI techniques such as Retrieval-Augmented Generation (RAG), the system automatically checks whether the information aligns with known, verified facts. If discrepancies are detected, the system flags potential disinformation and provides a summary of conflicting information.

Increasing Volunteering Through AI-Driven Awareness | Volunteering rates are declining partly because people are unaware of local needs or find it difficult to understand how they can help. This use case focuses on making volunteer opportunities easier to understand and engage with.

Use of Artificial Intelligence

Responsible AI Integration

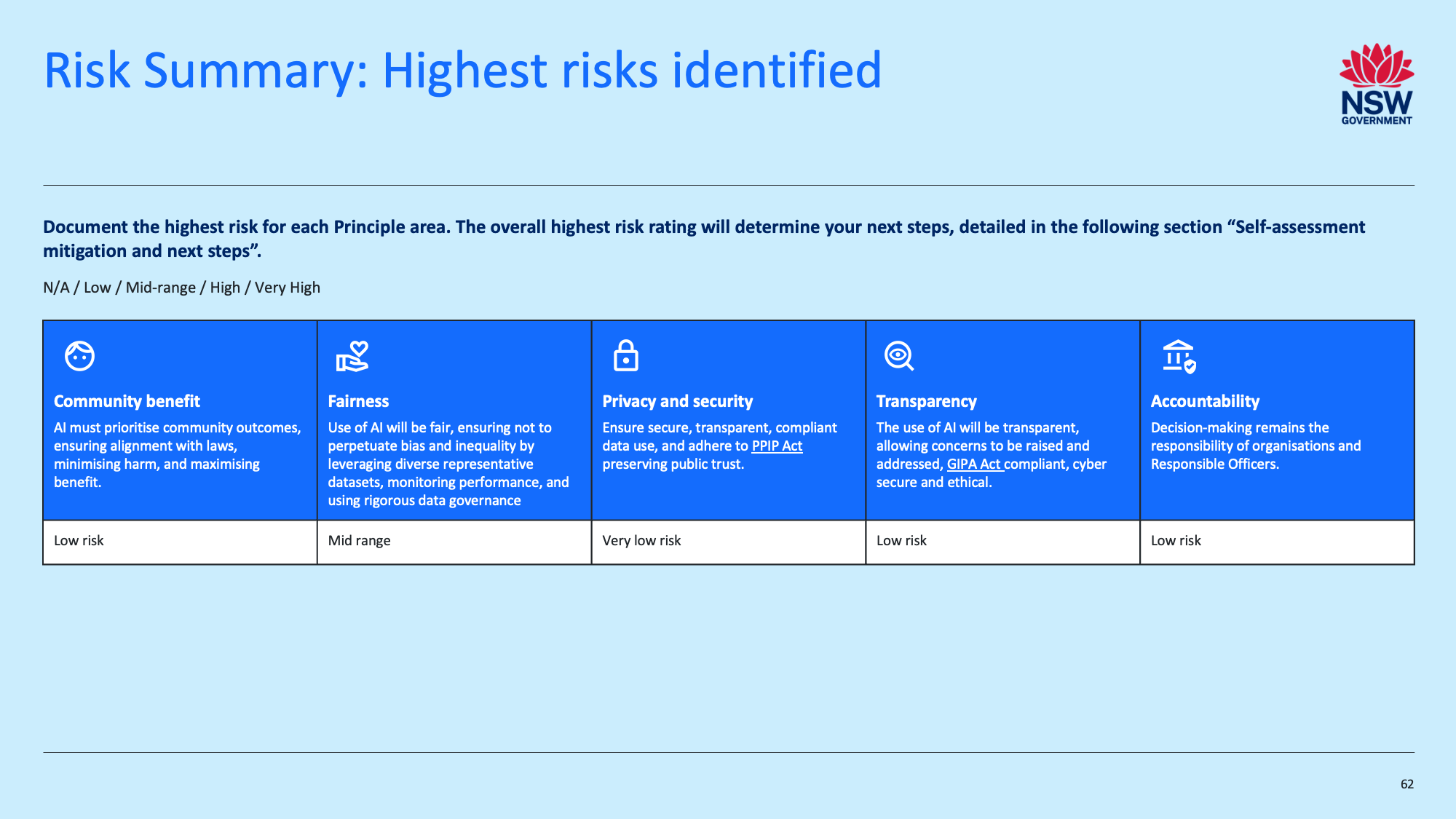

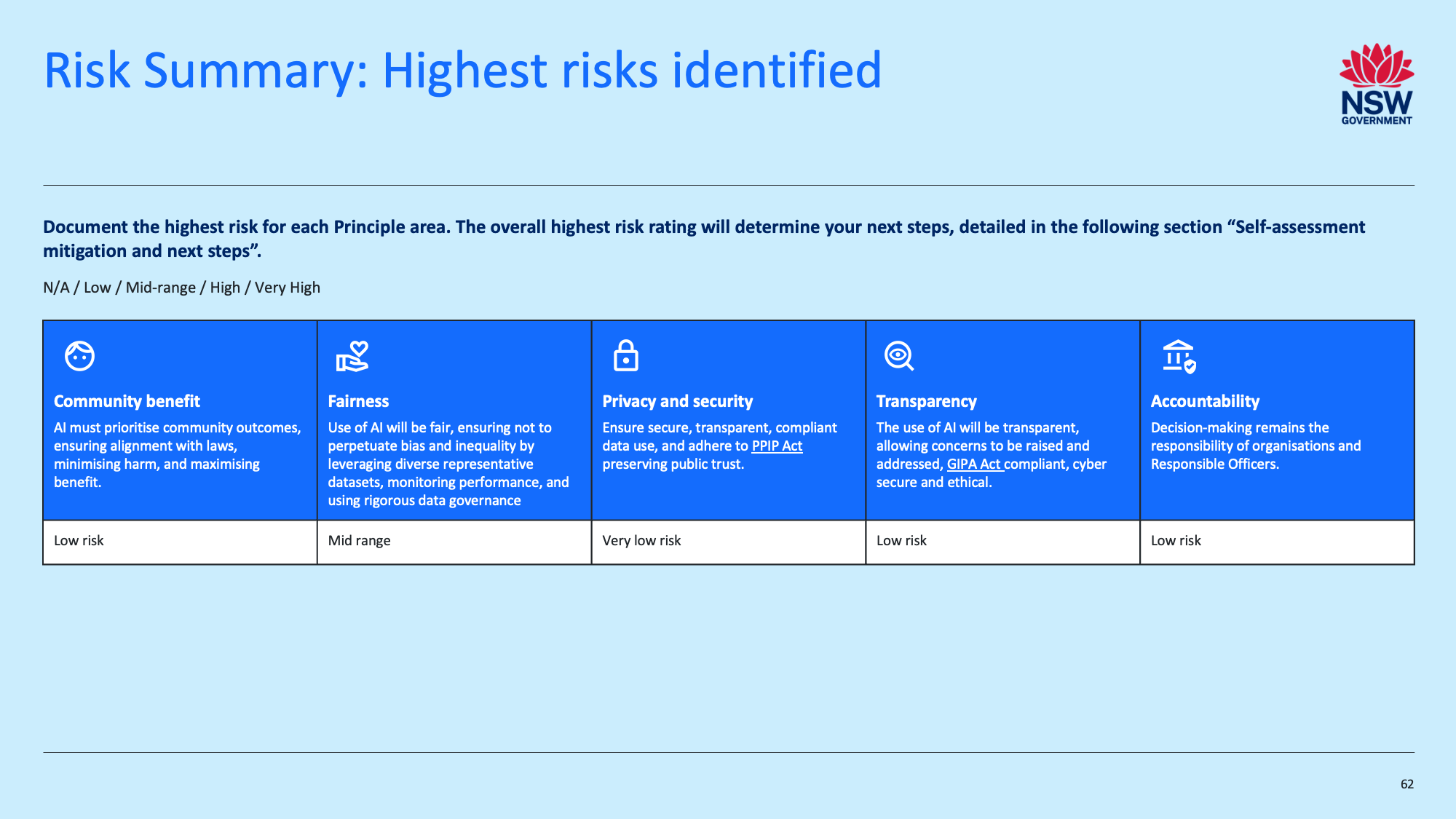

Recognizing the need for ethical, responsible, and safe AI, we are committed to transparency and public trust. We introduce a governance layer that oversees the AI orchestration engine, monitoring performance metrics related to accessibility, accuracy, and fairness.

To that end we completed a self assessment using the The NSW �AI Assessment Framework. This is an important asset for any project looking for use in government and should be completed in multiple stages of the project lifecycle, including at the initial concept stage.

CLICK ON IMAGE TO VIEW OR DOWNLOAD NSW AI ASSESSMENT FRAMEWORK RESPONSE

This allows both citizens and government agencies to track key outcomes and intervene if needed, ensuring the system remains trustworthy and inclusive for all.

Advanced AI Techniques

We achieve these goals using some state-of-the-art AI technologies, including:

- Multi-modal Large Language Models to handle text, speech, making services accessible to all, including those with disabilities.

- Retrieval-Augmented Generation (RAG) techniques to ensure that information is accurate and sourced from authoritative references.

- Agentic AI Models to validate query results before presenting them to users, ensuring responsible and accurate responses.

- Governance Dashboards aligned with the latest AI principles and accessibility frameworks, especially focused on providing transparency and accountability in service to all citizens, including those with disabilities.

Technology Stack

Our prototype is built with scalability, flexibility, and accessibility in mind:

- Python for backend processing.

- Vue.js, Vuetify, Vite for front end development

- Langchain and LangServ for managing language models and agent-based workflows.

- Large Language Models(LLM) for natural language understanding and generation.

- Agentic autonomous agents for validating outputs and ensuring ethical standards.

- Text-to-Speech for accessibility, offering auditory assistance to those who need it.

- Vector database Index and store embeddings from data ingestion pipelines

All components are deployed in Docker containers, using Docker Compose in a component based architecture. LLM API’s are invokable within microservices using LangServ to ensure easy scalability and modularity across different environments.

=====================================

AI In Governance - The Bigger Picture

=====================================

This section looks to answer some of the strategic questions posed by the Infosys International Challenge.

It addresses questions in the following areas:

- Boosting Operational Efficiency

- Improving Transparency

- Ensuring Ethical Use

- Data Privacy and Security

- Building Public Trust

- Future Adaptations

Boosting Operational Efficiency

In a large agency, scaling adoption commensurate with organisation maturity, risk appetite and experience of AI solutions will be key.

By following steps such as these, government agencies can strategically implement AI to enhance operational efficiency, optimize workflows, and reduce administrative overhead.

A challenge for councils, and smaller agenices will be CAPEX and ongoing OPEX for solutions, So partnering to share costs, assets and experiences may be part of considered adoption approach.

Understand Business Opportunities, Risks, and Rewards of Using AI:

* Conduct thorough analysis to identify high-impact areas for AI.

* Evaluate risks like data privacy, ethical concerns, and cybersecurity.

* Quantify rewards such as cost savings, time efficiency, and social benefits like improved social inclusion.

Create Lean Business Cases to Evaluate at High Level:

* Develop concise business cases focusing on high-level benefits and costs.

* Use these cases to secure executive buy-in and funding.

Put Through Governance Process:

* Implement a robust governance framework.

* Ensure compliance with state and federal regulations.

* Create policies for ethical AI use and risk management.

Led by Governance Executive Stakeholders:

* Engage executive stakeholders to champion AI initiatives and drive alignment.

* Foster cross-departmental collaboration for resource and expertise leverage.

Align with Practices of State and Federal Government:

* Ensure AI implementations align with state and federal guidelines.

* Adopt best practices and standards for interoperability and compliance.

Look to Partner for Underpinning Common Scaffolding Logic:

* Collaborate with technology partners and vendors.

* Leverage existing infrastructure to reduce costs and streamline implementation.

Use Data Science Projects to Ensure Ideas are Sound Before Significant Investment:

* Conduct pilot projects and proof-of-concepts to validate AI applications.

* Use data science methodologies to assess feasibility, scalability, and effectiveness.

Improving Transparency:

By strategically implementing AI and ensuring robust governance, government agencies can significantly enhance transparency, improve public trust, and deliver better services.

However, AI transparency is still an emerging field with the Australian government only publishing Ethical Principals for AI recently. NSW State Government AI Assessent Framework provides an excellent set of resources that may help guide agencies.

Provide Governance Dashboards to Inform on Transparency:

* Develop AI-powered dashboards for real-time insights into government operations.

* Make dashboards accessible to officials and the public to foster transparency.

Ensure that a View on Decision-Making and Consequences is Made:

* Use AI to track and document the decision-making process within agencies.

* Provide clear visibility into decision-making and its rationale.

Enable a Right of Reply and Complaint by Citizens:

* Implement AI-driven platforms for citizens to voice concerns and provide feedback.

* Monitor these platforms to ensure timely and transparent responses.

Ensure There are Accountable Parties:

* Assign specific roles and responsibilities for accountability.

* Use AI to monitor compliance and ensure accountable actions are taken.

Ensure Procurement Practices are Updated to Reflect FOAK Nature of AI:

* Update procurement policies for AI technologies, including first-of-a-kind (FOAK) solutions.

* Involve architecture, cybersecurity experts, and AI specialists in the procurement process for comprehensive evaluation and integration.

Ensuring Ethical Use:

To ensure the ethical use of AI applications in the public sector, a comprehensive set of frameworks and guidelines should be established.

These frameworks should encompass principles and practical steps to guarantee the responsible design, development, deployment, procurement, and use of AI technologies.

By implementing these frameworks and guidelines, public sector organisations can ensure that AI applications are used ethically, addressing concerns about algorithmic bias and fostering trust in AI technologies.

Here's a summary of key frameworks and guidelines that are in practice or emerging in Australia.

There are considerable efforts internationally as well as in open source initiatives.

NSW AI Assessment Framework (AIAF)

The NSW AI Assessment Framework (AIAF) provides a self-assessment tool for NSW Government agencies. It is designed to ensure that AI technologies are utilised responsibly by evaluating risks and promoting ethical considerations throughout the AI lifecycle. Agencies can use this framework to assess the potential impacts of their AI projects, ensuring ethical design, development, and deployment.

Australia’s AI Ethics Principles

Australia's AI Ethics Principles serve as a recent foundational guide to ensure AI systems are safe, secure, and reliable. These voluntary principles include:

* Human, societal, and environmental wellbeing

* Human-centred values

* Fairness

* Privacy protection and security

* Reliability and safety

* Transparency and explainability

* Contestability

* Accountability

Development of Key Performance Indicators (KPIs)

To operationalize the AI Ethics Principles, relevant KPIs should be developed.

- These KPIs will help measure adherence to ethical standards and ensure continuous improvement in AI practices.

- For instance, KPIs may track the fairness of AI decisions, the transparency of algorithms, and the protection of user data.

Addressing Algorithmic Bias

To tackle algorithmic bias and ensure fairness, the following measures can be implemented:

- Diverse Data Sets: Using diverse and representative data sets to train AI systems can mitigate biases.

- Bias Audits: Regular audits of AI systems to identify and correct biases.

Inclusive Design: Involving diverse teams in the design and development process to bring multiple perspectives and reduce biases.

Ensuring Transparency and Accountability

To promote transparency and accountability in AI systems:

- Explainable AI: Developing AI systems that provide clear and understandable explanations for their decisions. This may be a challenge when using commercial LLM’s which may be largely opaque.

- Documentation and Reporting: Maintaining detailed documentation of AI development processes and decision-making criteria.

- Regulatory Oversight: Establishing regulatory bodies to oversee AI deployments and ensure compliance with ethical standards.

Benchmarking and Standards

Utilising benchmark suites like those developed by MLCommons can help measure the performance and ethical compliance of AI systems. These benchmarks assess various aspects of AI models, ensuring they meet the required standards for fairness, reliability, and transparency.

Data Privacy and Security:

To protect the privacy and security of sensitive data used by AI in public sector applications, a multi-faceted approach involving robust frameworks, continuous monitoring, and proactive measures must be implemented.

By implementing these comprehensive measures, public sector organisations can effectively protect the privacy and security of sensitive data used in AI applications, ensuring that data protection practices do not compromise the effectiveness of AI-driven initiatives.

Below are some key areas and strategies to address these concerns effectively:

Adhering to OWASP Top 10 for Large Language Model Applications

The OWASP Top 10 provides critical guidelines for securing large language model (LLM) applications. Key measures include:

- Data Privacy and Security: Implement strong data encryption and access control mechanisms to protect sensitive data.

- Input Validation: Ensure that all inputs to AI models are validated to prevent injection attacks.

- Monitoring and Logging: Continuously monitor AI systems for unauthorised access and maintain logs for auditing purposes.

- Model Security: Secure the AI models themselves to prevent theft or tampering.

DevSecOps Practices

Integrating security into the development lifecycle (DevSecOps) ensures that security measures are considered at every stage of AI application development:

* Continuous Integration and Continuous Deployment (CI/CD): Incorporate automated security testing into CI/CD pipelines.

* Code Reviews and Audits: Regularly review code for security vulnerabilities and conduct thorough security audits.

* Security Training: Provide ongoing security training for developers to keep them updated on best practices.

Advanced Security Measures

Moving beyond traditional security frameworks like ISO27001, more proactive and interrogative measures should be adopted:

- Red Teaming: Conduct simulated attacks (red teaming) to identify vulnerabilities and improve defensive measures.

- Penetration Testing: Regularly perform cybersecurity penetration testing to discover and fix security gaps.

- Treat AI as First-of-a-Kind (FOAK): Handle AI projects with extra caution and due diligence until they become more established and standardised.

- Data Protection without Compromising Effectiveness

Governments can ensure data protection while maintaining the effectiveness of AI initiatives through the following strategies:

- Data Classification: Classify data based on its sensitivity and apply appropriate protection levels. For example, more sensitive data may require stricter access controls and encryption.

- Data Quality: Ensure high-quality data by implementing data validation and cleansing processes. High-quality data can help in achieving accurate and reliable AI outcomes without compromising security.

- Data Products and Stewardship: Establish roles like data stewards and data product owners to oversee data governance. These roles ensure that data is managed responsibly and in compliance with privacy regulations.

Privacy-Preserving Techniques: Use privacy-preserving techniques such as differential privacy, federated learning, and homomorphic encryption to protect data while enabling AI analytics.

Regulatory and Policy Measures

Governments should also implement regulatory and policy measures to ensure data protection:

Data Protection Laws: Enforce data protection laws and regulations (e.g., GDPR) to mandate the secure handling of sensitive data.

- Transparency and Accountability: Require public sector organisations to be transparent about their data use practices and hold them accountable for any data breaches or misuse.

- Public Awareness and Education: Educate the public about data privacy rights and the measures taken to protect their data, fostering trust in AI-driven initiatives.

Building Public Trust:

To build and maintain public trust in AI-driven systems, governments must adopt a transparent, inclusive, and accountable approach throughout the development and deployment processes. Here are strategies that can help achieve this, focusing on the updated list provided:

Providing Beneficial Tools

Governments should start by optionally providing AI tools that demonstrably improve citizens’ lives. These tools should be user-friendly and address real needs, such as:

- Healthcare: AI-driven health diagnostics and personalised treatment plans.

- Public Services: Efficient AI-enabled public service platforms for tasks like renewing licenses or accessing social services.

Co-Design with Citizens

Involving citizens in the co-design process ensures that AI tools are tailored to meet their needs and concerns:

- Workshops and Focus Groups: Organise workshops and focus groups with diverse community members to gather input and feedback on AI initiatives.

- Surveys and Polls: Use surveys and polls to understand public opinion and preferences regarding AI applications.

Delivering Tangible Results

To build trust, AI-driven systems must deliver tangible and measurable results that enhance efficiency and transparency:

- Case Studies: Showcase successful case studies where AI has improved service delivery or operational efficiency.

- Performance Metrics: Regularly publish performance metrics to demonstrate the effectiveness of AI tools.

Ensuring Accountability

Clear accountability mechanisms are essential for maintaining public trust:

- Accountability Frameworks: Develop and implement frameworks that clearly define the responsibilities of all stakeholders involved in AI projects.

- Independent Oversight: Establish independent bodies to oversee AI deployments and ensure they comply with ethical standards and regulations.

Setting and Monitoring KPIs

Key Performance Indicators (KPIs) should be established to monitor the performance and ethical compliance of AI systems:

- Transparency KPIs: Metrics that measure how transparent the AI system is in its operations and decision-making processes.

- Fairness KPIs: Metrics to assess the fairness and bias in AI outcomes.

Governance and Transparency

Effective governance and transparency are crucial for building trust:

- Open Governance Models: Implement governance models that include public participation and oversight.

- Transparent Reporting: Regularly publish reports detailing AI system operations, data usage, and decision-making processes.

User Acceptance Testing

Involve end-users in the testing phase to ensure the AI tools are user-friendly and meet their expectations:

* Beta Testing: Conduct beta testing with a select group of users to gather feedback and make necessary adjustments.

* Iterative Improvements: Use the feedback from user acceptance testing to iteratively improve the AI systems.

Feedback from Users

Actively seek and incorporate feedback from users to continually enhance AI systems:

- Feedback Channels: Establish multiple channels for users to provide feedback, such as online forms, hotlines, and social media platforms.

- Regular Reviews: Regularly review and analyse user feedback to identify areas for improvement.

Publishing Evaluation Results

Transparency in evaluating AI systems builds trust and demonstrates a commitment to accountability:

- Public Reports: Publish detailed evaluation reports that include performance metrics, user feedback, and any identified issues.

- Open Data: Where possible, provide open access to evaluation data to allow independent verification and analysis.

Effective Communication and Change Management

Effective communication strategies are essential to inform and educate the public about AI initiatives:

- Public Awareness Campaigns: Launch campaigns to educate citizens about the benefits, risks, and safeguards associated with AI.

- Transparent Communication: Maintain open lines of communication to address public concerns and provide updates on AI projects.

Community Engagement

Engaging the community is vital for gaining public support and trust

Future Adaptations:

Emerging trends and technologies in AI have the potential to significantly enhance public sector efficiency and transparency. However, there are lessons to be learnt from previous large scale automation projects undertaken by government.

To proactively adapt and ensure these advancements serve the public interest, governments should focus on the following areas:

AI Futures:

Technologies like Generative AI, NLP, and multi-agent systems can automate complex government processes, improving decision-making, transparency, and efficiency. Governments should focus on investing in research and development, trialling and piloting solutions to stay at the cutting edge of these advancements.

Digital Identity:

AI-enabled biometric systems and blockchain-based decentralized identity are emerging in Austra;oa. Pilot programs need to recognise the increasing role they will play in to test these systems, ensuring they are scalable and effective while balancing privacy concerns.

Cybersecurity:

As AI adoption increases, cybersecurity measures must evolve. AI-driven anomaly detection and autonomous agents can bolster defenses against cyber threats. Governments must invest in standardized processes to ensure the security of sensitive data and systems.

Multi-Agent Systems:

Multi-agent systems can work together autonomously to manage tasks like public services, data analysis, and resource allocation. By testing pilot programs and maintaining human oversight, these systems can significantly reduce administrative burdens.

Updating Legislation:

Continuous updating of AI legislation is essential to address evolving challenges such as algorithmic bias, transparency, and privacy. Governments should engage stakeholders, including technologists, ethicists, and citizens, in the policy-making process to ensure legislation serves public interests.

Public Education:

To build trust in AI-driven systems, governments should prioritize educating the public through transparent communication, highlighting AI’s role in improving services while addressing concerns about privacy and ethics.

Key Areas for Proactive Adaptation:

* Invest in R&D: Stay ahead of AI advancements and foster continuous innovation.

* Pilot Programs: Test new technologies on a small scale to ensure effectiveness before wider deployment.

* Standardize Processes: Ensure consistent AI implementation across sectors to improve interoperability.

* Stakeholder Engagement: Involve citizens, technologists, and ethicists in AI policy and system design.

* Legislative Updates: Regularly refine legal frameworks to address new challenges.

* Cybersecurity: Strengthen AI-driven protections against evolving threats.

* Public Education: Build trust through transparent communication and public engagement.

By focusing on these areas, governments can adapt to AI advancements responsibly, improving efficiency, transparency, and public trust while safeguarding privacy and security.

Extending an implementation plan to include AI project elements

Government AI projects differ from typical agile or waterfall software or IT projects in several key ways, primarily because they rely on data and machine learning models to deliver results, instead of following predefined logic.

In government, many projects follow an agile delivery methodology. For example, in NSW, the service design and delivery process consists of four stages: Discovery, Alpha, Beta, and Live.

In AI projects, this process would be extended to focus more on data-driven activities, such as:

- Data Collection and Cleaning: Gathering and preparing data from multiple sources.

- Data Labeling: Ensuring data is accurately labeled to train models. ( Model Training and Testing: Building, training, and fine-tuning machine learning models.

- Validation: Testing models on unseen data to assess performance.

The project will also need to factor in a longer experimental phase, as developing an AI model often involves adjusting and retraining based on results.

These are additional focus areas for a project plan that includes AI and here are some key distinctions:

Data-Centric:

AI projects require significant focus on data collection, data cleaning, and preparation. This is in contrast to traditional projects, where processes are often rule-based and predefined.

Model-Based:

Instead of following rules, AI systems are trained using machine learning models. These models are trained, tested, and tuned on large datasets to make predictions or decisions.

Iterative and Experimental:

AI projects are more experimental and typically require multiple iterations during model training, testing, and validation. There is often more uncertainty in the outcome, as the success of the model depends on the quality of data and how well it generalizes.

Collaboration Across Teams:

AI projects require closer collaboration between data scientists, domain experts, software engineers, and business stakeholders. Engaging end users (citizens or staff) early on is critical for success, as their feedback helps shape how the AI will be used.

AI Projects in Government

Key Success Factors

For AI projects to succeed, it’s crucial to:

* Ensure co-design and engagement with citizens and agency staff.

* Involve all relevant teams (data scientists, engineers, domain experts).

* Focus on transparency, ensuring that AI is accepted and trusted by end users.

This simplified overview highlights the key differences and requirements for AI projects, with a focus on government implementation.