Project Description

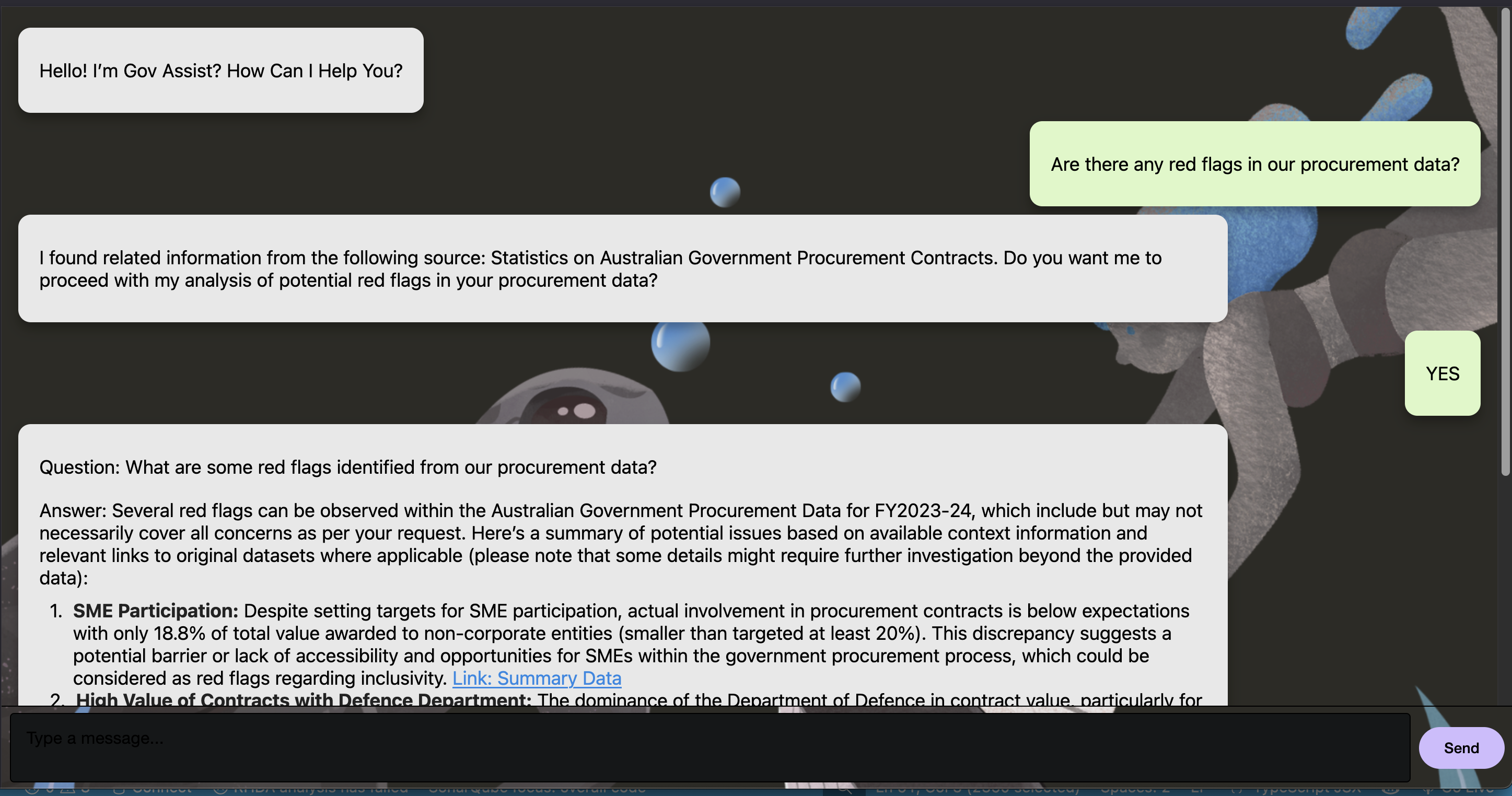

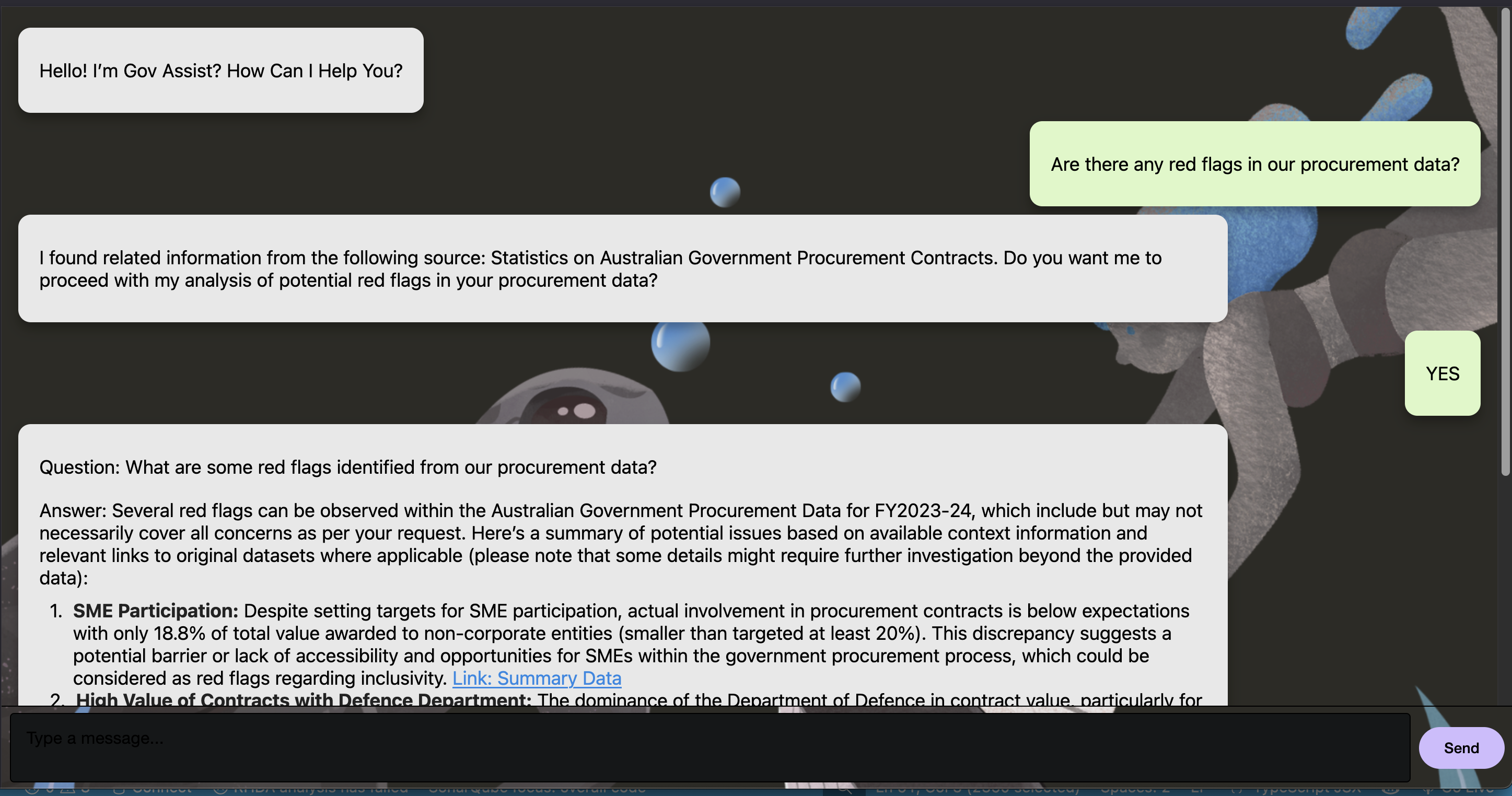

The project developed an AI system, implemented as a chatbot, to assist reliably, transparently, and auditably in managing and interacting with government datasets. By combining Retrieval-Augmented Generation (RAG), vectorized metadata, and a tool-calling architecture, the system delivers grounded responses with full audit trails. Advanced AI techniques are applied to keep outputs ethical, accountable, and privacy-preserving. The system supports government decision-making with multi-factor confidence scoring of over 99%.

Sources

Evidence of Work

Find the Evidence of Work here: Google Drive Folder

Documents

An Accurate and Trustworthy Chatbot for Data Interactions

Our Mission

Government agencies across Australia manage vast volumes of critical data, yet converting these resources into actionable insights remains a major challenge. High-stakes decisions require near-perfect accuracy, transparency, and accountability, standards that traditional AI often cannot meet.

Current Limitations

- Accuracy Gap – Commercial AI tools typically achieve around 90% accuracy. For government decisions, this is insufficient; outcomes must meet 99%+ reliability.

- Hallucination Risk – Generative AI may fabricate information, which is unacceptable when shaping public policy or delivering essential services.

- Auditability Crisis – Black-box AI outputs lack traceability, making it difficult to explain or justify decisions.

- Integration Complexity – Departmental silos make it difficult to draw insights across functions.

- Trust Deficit – Officials cannot fully rely on AI outputs without verifiable evidence and clear governance.

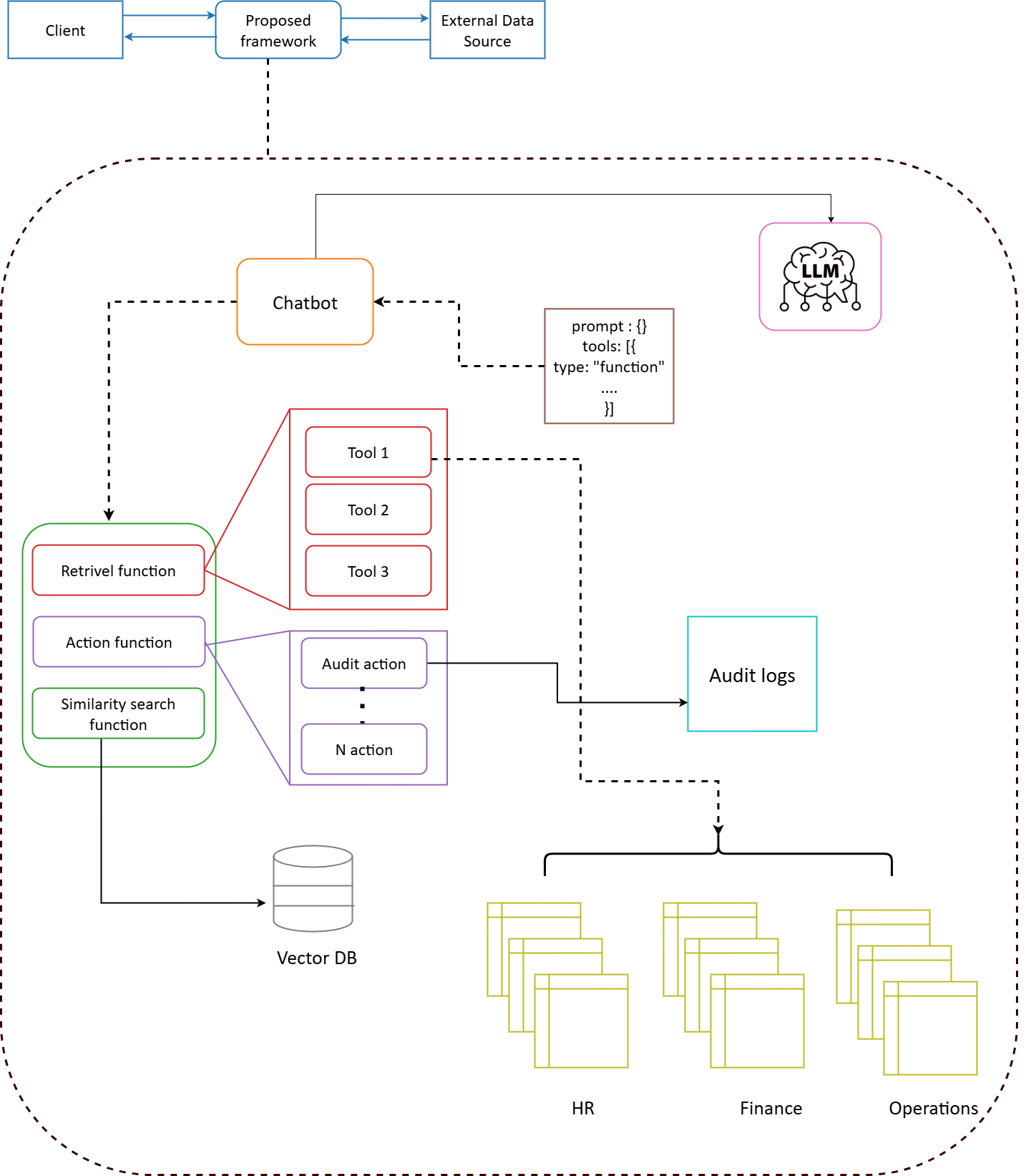

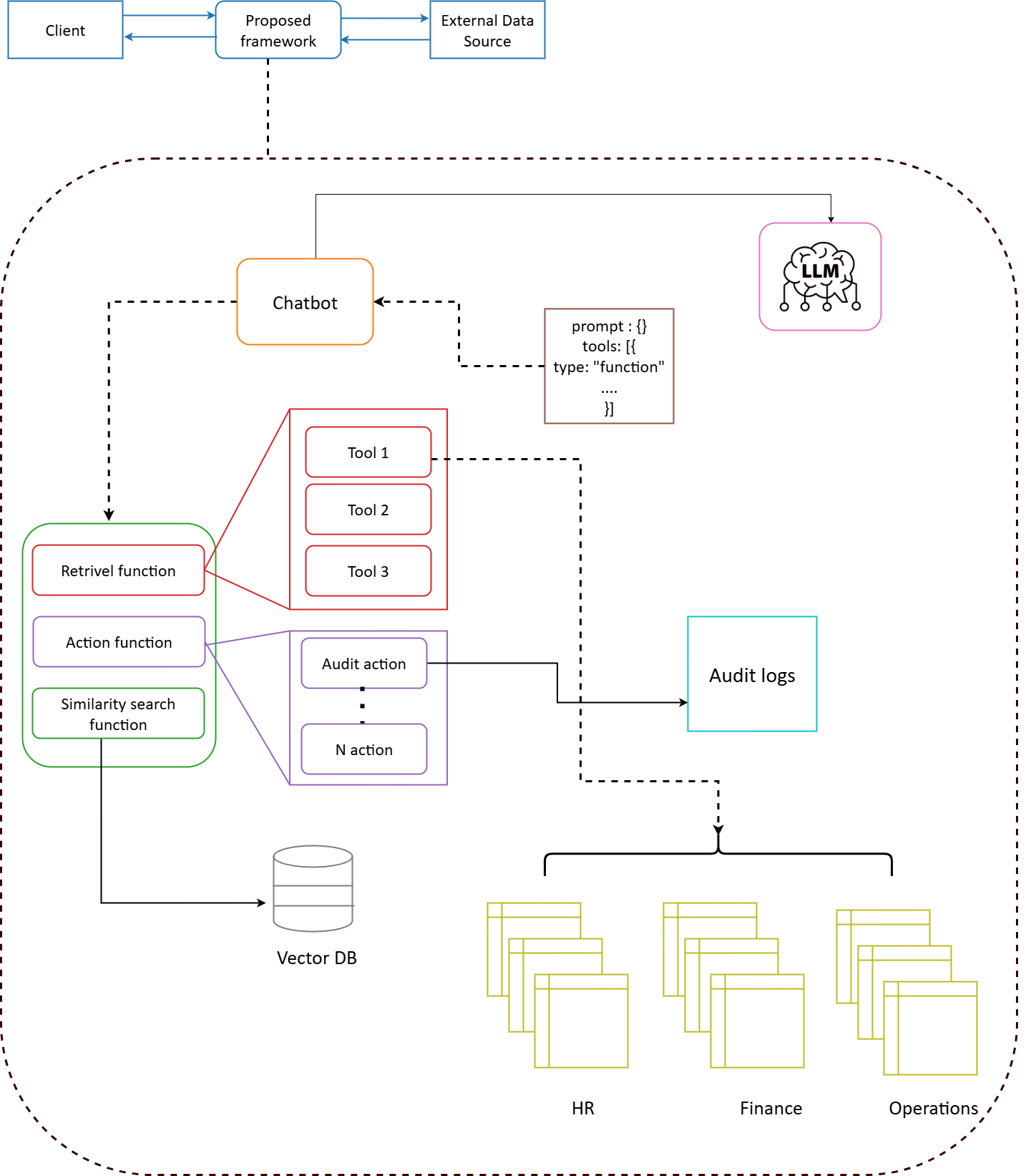

Figure 1: System Architecture Diagram

Our Approach

We designed an AI system focused on reliability, transparency, and inclusivity, combining advanced AI techniques with governance measures:

- Retrieval-Augmented Generation (RAG): Anchors AI responses to verified government sources, minimizing hallucination.

- Conversational Interactions: Lets users explore data through natural-language dialogue, improving engagement.

- Vectorized Metadata: Provides contextual understanding and accurate cross-referencing of diverse datasets.

- Tool-Calling Architecture: Integrates multiple data sources in a modular and secure way, allowing scalable workflows.

- Mathematical Aggregation & Statistics: Supports evidence-based reasoning and quantitative validation of AI outputs.

- Audit Logging: Captures all prompts and responses, maintaining a complete history for accountability.

- Source Referencing: Every response is linked to original datasets to ensure verifiability.
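Audit logging and source referencing can be combined in a single record per exchange. The following is a minimal Java sketch under stated assumptions, not the project's implementation: `SourceRef`, `AuditEntry`, and `AuditLog` are hypothetical names, entries are kept in memory, and the rule that every response must cite at least one source is an illustrative policy. A production system would persist entries to tamper-evident storage.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

class AuditDemo {
    public static void main(String[] args) {
        AuditLog log = new AuditLog();
        // Hypothetical exchange; the record ID is a placeholder, not real data.
        log.append("Example question about FOI activity",
                "Example answer citing the FOI dataset",
                List.of(new SourceRef("Freedom of Information Statistics", "example-record-id")));
        System.out.println(log.entries().size()); // prints 1
    }
}

// Hypothetical pointer to the dataset and record a response drew on.
record SourceRef(String dataset, String recordId) {}

// One immutable audit record: prompt, response, and the sources behind it.
record AuditEntry(long sequence, Instant timestamp, String prompt,
                  String response, List<SourceRef> sources) {
    AuditEntry {
        sources = List.copyOf(sources); // defensive, immutable copy
    }
}

// Append-only, in-memory log (assumption: a real system would persist it).
class AuditLog {
    private final List<AuditEntry> entries = new ArrayList<>();

    // Records one exchange; rejects responses that cite no source.
    synchronized AuditEntry append(String prompt, String response, List<SourceRef> sources) {
        if (sources.isEmpty()) {
            throw new IllegalArgumentException("Every response must reference at least one source");
        }
        AuditEntry entry = new AuditEntry(entries.size() + 1, Instant.now(), prompt, response, sources);
        entries.add(entry);
        return entry;
    }

    // Returns an immutable snapshot: entries can be reviewed but not edited or removed.
    synchronized List<AuditEntry> entries() {
        return List.copyOf(entries);
    }
}
```

Exposing only `append` and a read-only snapshot keeps the history complete: nothing in the API can rewrite or delete a past entry.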

Key Features & Capabilities

- Multi-Factor Confidence Scoring – Evaluates the reliability of every AI output.

- Plain Language Summaries – Makes complex government information accessible to readers at all literacy levels.

- Cross-Silo Interoperability – Enables secure integration across departments.

- Audit-Ready Reporting – Ensures outputs can be reviewed, explained, and justified to meet governance standards.
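The document does not define which factors make up the multi-factor confidence score. The sketch below assumes three hypothetical factors, each normalized to [0, 1] (retrieval similarity, source coverage, and validator agreement), combines them as a weighted mean, and routes anything below an assumed 0.99 threshold to human review. The weights and threshold are placeholders, not the project's calibrated values.

```java
import java.util.Map;

class ConfidenceDemo {
    public static void main(String[] args) {
        // Hypothetical factor weights and release threshold.
        ConfidenceScorer scorer = new ConfidenceScorer(
                Map.of("retrieval", 0.4, "coverage", 0.3, "validation", 0.3), 0.99);
        double score = scorer.score(Map.of("retrieval", 1.0, "coverage", 1.0, "validation", 0.95));
        System.out.printf("%.3f -> %s%n", score, scorer.decide(score));
    }
}

enum Decision { RELEASE, HUMAN_REVIEW }

// Combines per-factor scores in [0, 1] into one weighted confidence value.
class ConfidenceScorer {
    private final Map<String, Double> weights; // factor name -> weight (must sum to 1)
    private final double threshold;            // minimum score for automatic release

    ConfidenceScorer(Map<String, Double> weights, double threshold) {
        double total = weights.values().stream().mapToDouble(Double::doubleValue).sum();
        if (Math.abs(total - 1.0) > 1e-9) {
            throw new IllegalArgumentException("Weights must sum to 1");
        }
        this.weights = Map.copyOf(weights);
        this.threshold = threshold;
    }

    // Weighted mean over the configured factors; a missing factor counts as 0,
    // and factors without a weight are ignored.
    double score(Map<String, Double> factors) {
        double sum = 0.0;
        for (Map.Entry<String, Double> w : weights.entrySet()) {
            double value = factors.getOrDefault(w.getKey(), 0.0);
            if (value < 0.0 || value > 1.0) {
                throw new IllegalArgumentException("Factor out of range: " + w.getKey());
            }
            sum += w.getValue() * value;
        }
        return sum;
    }

    Decision decide(double score) {
        return score >= threshold ? Decision.RELEASE : Decision.HUMAN_REVIEW;
    }
}
```

With the illustrative inputs in `main`, the score is 0.4 × 1.0 + 0.3 × 1.0 + 0.3 × 0.95 = 0.985, which falls below 0.99, so the answer would be sent for human review rather than released automatically.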

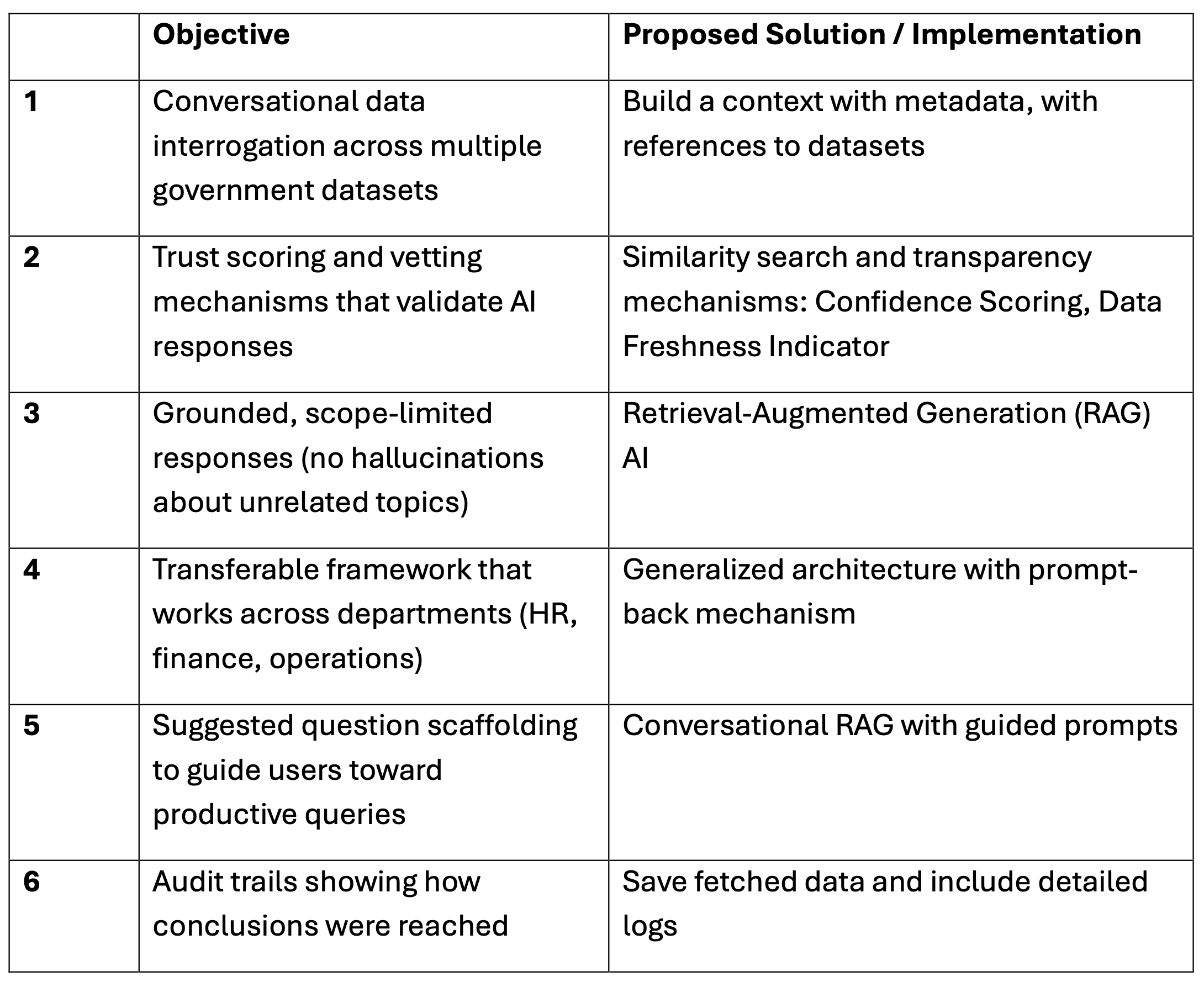

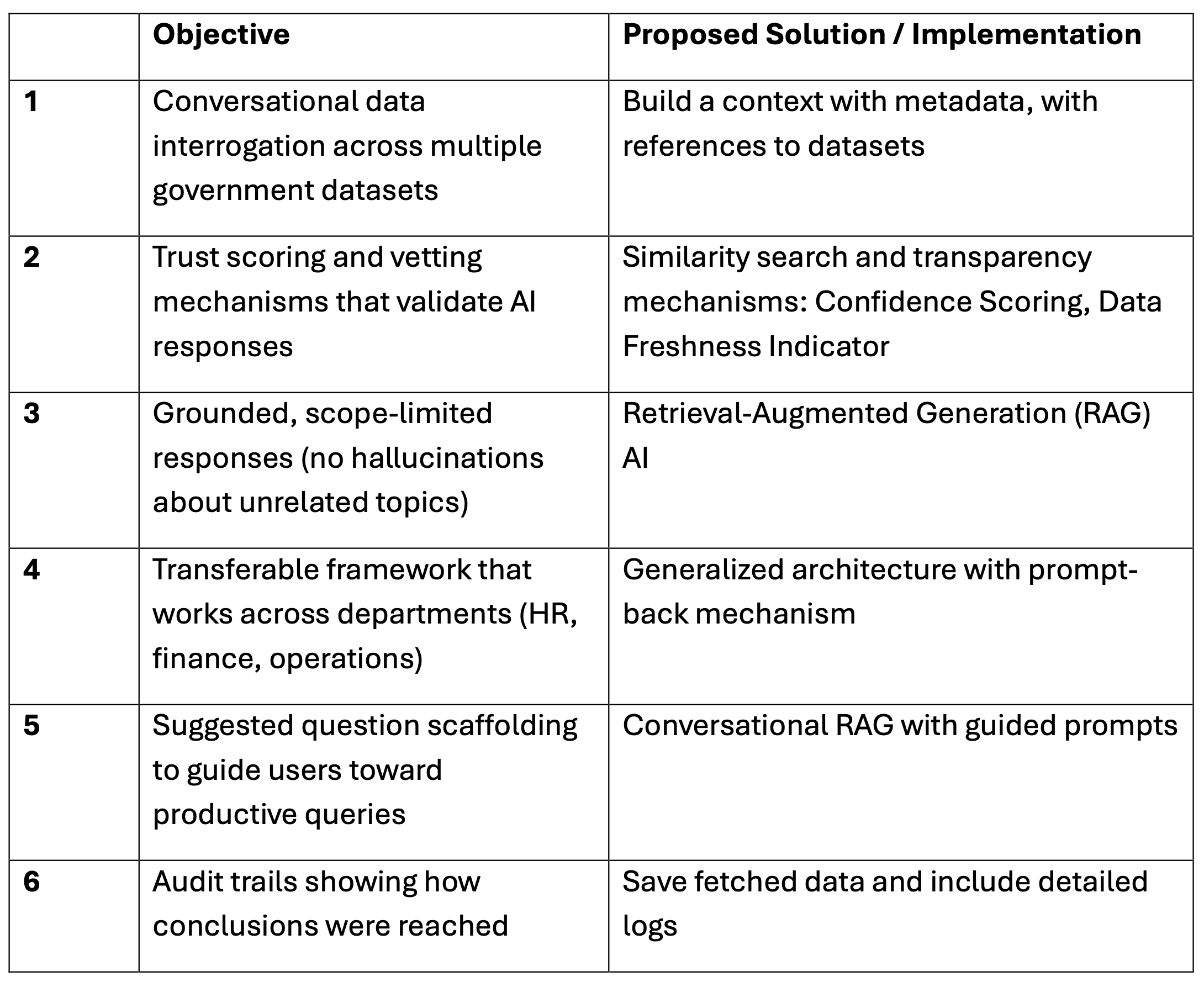

Objectives We Achieved

Example Applications and Explanations

1. Finance

- Use Case: Detect anomalies in vendor payments or forecast budget compliance.

- Explanation: The AI system analyzes financial datasets to flag unusual patterns (e.g., duplicate invoices, unexpected expenditure spikes). It also aggregates historical spending trends to provide accurate forecasts, reducing risk.

2. Human Resources (HR)

- Use Case: Analyze leave trends and workforce patterns.

- Explanation: By examining HR records, timesheets, and leave applications, the AI identifies trends such as absenteeism spikes or seasonal gaps. This helps managers optimize resource allocation.

3. Operations

- Use Case: Identify irregularities in procurement or service delivery.

- Explanation: The system evaluates procurement, logistics, and service records to spot delays, inefficiencies, or inconsistencies. Alerts help managers take proactive measures.
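The Finance example mentions flagging duplicate invoices and unexpected expenditure spikes. A minimal Java sketch of both checks follows, assuming a hypothetical `Payment` record with amounts in cents. Duplicates are matched on vendor, invoice number, and amount; spikes use a median-based modified z-score, 0.6745 × (x − median) / MAD, with 3.5 as a commonly used cut-off. Both rules are illustrative assumptions rather than the project's detection logic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class AnomalyDemo {
    public static void main(String[] args) {
        // Toy payments; P3 repeats P1's vendor, invoice number, and amount.
        List<Payment> payments = List.of(
                new Payment("P1", "Vendor A", "INV-001", 125_000),
                new Payment("P2", "Vendor B", "INV-778", 48_050),
                new Payment("P3", "Vendor A", "INV-001", 125_000));
        System.out.println(Anomalies.duplicateInvoices(payments));

        double[] monthlySpend = {100, 110, 90, 105, 95, 500}; // toy series
        System.out.println(Anomalies.spikes(monthlySpend, 3.5)); // prints [5]
    }
}

// Hypothetical payment record; cents avoid floating-point equality problems.
record Payment(String paymentId, String vendor, String invoiceNumber, long amountCents) {}

class Anomalies {
    // Flags every payment whose (vendor, invoice number, amount) was already seen.
    static List<Payment> duplicateInvoices(List<Payment> payments) {
        record Key(String vendor, String invoice, long amountCents) {}
        Set<Key> seen = new HashSet<>();
        List<Payment> duplicates = new ArrayList<>();
        for (Payment p : payments) {
            if (!seen.add(new Key(p.vendor(), p.invoiceNumber(), p.amountCents()))) {
                duplicates.add(p);
            }
        }
        return duplicates;
    }

    // Returns indices whose modified z-score exceeds the threshold (upward spikes only).
    static List<Integer> spikes(double[] values, double threshold) {
        List<Integer> flagged = new ArrayList<>();
        if (values.length == 0) {
            return flagged;
        }
        double median = median(values);
        double[] deviations = Arrays.stream(values).map(v -> Math.abs(v - median)).toArray();
        double mad = median(deviations); // median absolute deviation
        if (mad == 0.0) {
            return flagged; // no spread to measure against
        }
        for (int i = 0; i < values.length; i++) {
            double modifiedZ = 0.6745 * (values[i] - median) / mad;
            if (modifiedZ > threshold) {
                flagged.add(i);
            }
        }
        return flagged;
    }

    private static double median(double[] data) {
        double[] sorted = data.clone();
        Arrays.sort(sorted);
        int mid = sorted.length / 2;
        return sorted.length % 2 == 0 ? (sorted[mid - 1] + sorted[mid]) / 2.0 : sorted[mid];
    }
}
```

A median-based score is used here instead of a mean-and-standard-deviation z-score because, in short series, a single large spike inflates the standard deviation and can partly mask itself.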

Outcomes Achieved

- Reduced Misinformation: Outputs grounded in verified data.

- Enhanced Transparency: Full audit logs and source references.

- Improved Accessibility: Plain English interaction for all literacy levels.

- Stronger Collaboration: Cross-agency integration for unified insights.

Advanced AI Techniques

- Retrieval-Augmented Generation (RAG): Provides accurate and grounded responses.

- Agent-Based Validation: Autonomous agents confirm query results before output.

- Governance & Transparency Dashboards: Track performance, accessibility, and compliance.
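One way an autonomous validation step could confirm a result before output is to recompute the claimed figure independently from the source rows. The sketch below is hypothetical: `DraftAnswer`, `Validator`, `SumValidator`, and `ValidationGate` are illustrative names, and recomputing a sum is only one example of a check. The gate releases a draft only when every validator passes.

```java
import java.util.List;

class ValidationDemo {
    public static void main(String[] args) {
        // Toy source rows and a draft answer that claims their total.
        List<Double> sourceValues = List.of(1200.0, 800.0, 500.0);
        DraftAnswer draft = new DraftAnswer("Total spend is 2500.0", 2500.0);
        List<Validator> validators = List.of(
                (d, rows) -> rows.isEmpty()
                        ? new ValidationResult(false, "No source rows cited")
                        : new ValidationResult(true, "Sources present"),
                new SumValidator(1e-6));
        System.out.println(new ValidationGate(validators).review(draft, sourceValues));
    }
}

// A draft answer together with the numeric figure it asserts.
record DraftAnswer(String text, double claimedValue) {}

record ValidationResult(boolean passed, String reason) {}

@FunctionalInterface
interface Validator {
    ValidationResult check(DraftAnswer draft, List<Double> sourceValues);
}

// Independently recomputes a total from the source rows and compares it to the claim.
class SumValidator implements Validator {
    private final double tolerance;

    SumValidator(double tolerance) { this.tolerance = tolerance; }

    @Override
    public ValidationResult check(DraftAnswer draft, List<Double> sourceValues) {
        if (sourceValues.isEmpty()) {
            return new ValidationResult(false, "No source rows to check against");
        }
        double recomputed = sourceValues.stream().mapToDouble(Double::doubleValue).sum();
        return Math.abs(recomputed - draft.claimedValue()) <= tolerance
                ? new ValidationResult(true, "Claim matches recomputed total " + recomputed)
                : new ValidationResult(false,
                        "Claimed " + draft.claimedValue() + " but sources sum to " + recomputed);
    }
}

// Runs every validator; returns the first failure, or a pass if all succeed.
class ValidationGate {
    private final List<Validator> validators;

    ValidationGate(List<Validator> validators) { this.validators = List.copyOf(validators); }

    ValidationResult review(DraftAnswer draft, List<Double> sourceValues) {
        for (Validator v : validators) {
            ValidationResult result = v.check(draft, sourceValues);
            if (!result.passed()) {
                return result;
            }
        }
        return new ValidationResult(true, "All validators passed");
    }
}
```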

Technology Stack

- Java Runtime (17+)

- NPM (latest)

- Homebrew

- Expo

- React

- Git

- Docker

- Maven

- Spring Framework

Tools & Software

- Canva

- GenAI

- Excel

- Mermaid

- Prezi

Datasets We Used

AusTender Procurement Statistics – Go to Dataset

Details of planned procurements, tenders, standing offers, and awarded contracts.

Freedom of Information Statistics – Go to Dataset

FOI activity reports including reviews, costs, and adjusted staff time.

Kaggle Employee Leave Tracking Data – Go to Dataset

Employee leave details for 2024 across departments.

AI in Governance

Focus areas for strategic implementation:

- Boosting Operational Efficiency

- Improving Transparency

- Multi-Factor Confidence Scoring

- Ensuring Ethical Use

- Data Privacy and Security

- Building Public Trust

- Future Adaptations

Boosting Operational Efficiency

- Identify high-impact areas

- Risk/reward assessment

- Lean business cases

- Governance integration

- Cross-department collaboration

- Pilot projects

Improving Transparency

- Governance dashboards

- Decision traceability

- Accountability assignments

- Expert review processes

Ensuring Ethical Use

- Align with AI principles

- Mitigate algorithmic bias

- Explainable AI

- Documentation & reporting

- Independent oversight

- Remove personal identifiers
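For the "Remove personal identifiers" item, a minimal redaction sketch in Java follows. The patterns are simplified assumptions: a basic email regex, an Australian phone-number pattern (`+61` or a leading `0`, one area or mobile digit, then eight digits with optional separators), and a caller-supplied list of known identifiers such as names from a staff roster. Regex redaction alone does not guarantee de-identification; the sketch only illustrates the step.

```java
import java.util.List;
import java.util.regex.Pattern;

class RedactionDemo {
    public static void main(String[] args) {
        Redactor redactor = new Redactor(List.of("Jane Citizen")); // hypothetical roster entry
        System.out.println(redactor.redact(
                "Jane Citizen (jane.citizen@example.gov.au, 0412 345 678) took 5 days of leave."));
        // prints: [NAME] ([EMAIL], [PHONE]) took 5 days of leave.
    }
}

// Simplified de-identification: masks emails, Australian phone numbers, and known names.
class Redactor {
    private static final Pattern EMAIL =
            Pattern.compile("[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\\.[A-Za-z]{2,}");
    // Assumed format: +61 or a leading 0, one area/mobile digit, then 8 digits.
    private static final Pattern AU_PHONE =
            Pattern.compile("(?:\\+61\\s?|\\b0)[2-478](?:[\\s-]?\\d){8}\\b");
    private final List<Pattern> knownIdentifiers;

    Redactor(List<String> knownIdentifiers) {
        // Quote each identifier so characters like '.' are matched literally.
        this.knownIdentifiers = knownIdentifiers.stream()
                .map(id -> Pattern.compile(Pattern.quote(id), Pattern.CASE_INSENSITIVE))
                .toList();
    }

    String redact(String text) {
        String out = EMAIL.matcher(text).replaceAll("[EMAIL]");
        out = AU_PHONE.matcher(out).replaceAll("[PHONE]");
        for (Pattern id : knownIdentifiers) {
            out = id.matcher(out).replaceAll("[NAME]");
        }
        return out;
    }
}
```

Emails are masked before names so that a name embedded in an email address is removed together with the address.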

Data Privacy & Security

- Secure architecture (encryption, access control)

- DevSecOps integration

- Advanced testing (red teaming, penetration testing)

- Privacy-preserving techniques (federated learning, differential privacy)

- Strong data governance roles
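Differential privacy is listed among the privacy-preserving techniques. As an illustration only, the sketch below applies the Laplace mechanism to a count query, assuming sensitivity 1 (one person changes a count by at most 1), so the noise scale is b = 1/ε. Noise is drawn by the inverse-CDF transform X = −b · sgn(U) · ln(1 − 2|U|) with U uniform on (−1/2, 1/2). The ε value and class names are hypothetical; production systems should rely on a vetted differential-privacy library, since naive floating-point noise generation has known weaknesses.

```java
import java.util.Random;

class PrivacyDemo {
    public static void main(String[] args) {
        // Hypothetical epsilon and true count (e.g. leave applications in one department).
        LaplaceMechanism mechanism = new LaplaceMechanism(0.5, new Random(7));
        System.out.println(mechanism.noisyCount(120));
    }
}

// Laplace mechanism for counting queries with sensitivity 1.
class LaplaceMechanism {
    private final double scale; // b = sensitivity / epsilon
    private final Random random;

    LaplaceMechanism(double epsilon, Random random) {
        if (epsilon <= 0) {
            throw new IllegalArgumentException("epsilon must be positive");
        }
        this.scale = 1.0 / epsilon;
        this.random = random;
    }

    double scale() { return scale; }

    // Adds Laplace(0, b) noise to the true count.
    double noisyCount(long trueCount) {
        double u;
        do {
            u = random.nextDouble() - 0.5; // uniform in [-0.5, 0.5)
        } while (u == -0.5);               // exclude the endpoint, where ln(0) diverges
        return trueCount + sample(u, scale);
    }

    // Inverse-CDF transform of u in (-0.5, 0.5) into Laplace(0, b) noise.
    static double sample(double u, double b) {
        return -b * Math.signum(u) * Math.log(1 - 2 * Math.abs(u));
    }
}
```

A smaller ε gives a larger scale b and therefore stronger privacy at the cost of noisier counts.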

Future Adaptations

- Invest in R&D (multi-agent systems)

- Pilot emerging technologies

- Enhance AI cybersecurity defenses

Data Story

Government agencies hold vast datasets but often struggle to extract insights. Traditional AI achieves ~90% accuracy, which is insufficient for critical decision-making.

Our RAG-based chatbot ensures:

- Grounded, scope-limited responses

- Human-guided interpretation

- Reduced hallucination risks

Retrieval-Augmented Generation (RAG)

- Data Processing: Converts unstructured data into vector embeddings.

- Semantic Matching: Retrieves content by meaning rather than exact keywords, improving contextual accuracy.
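The semantic matching step can be illustrated with cosine similarity over precomputed embeddings. This is a minimal sketch, not the project's pipeline: the 3-dimensional vectors are toy values (real embeddings come from an embedding model and are stored in a vector database), and `Chunk`, `Retriever`, and `groundedPrompt` are hypothetical names. Retrieved chunks keep their source IDs so the final prompt can require citations.

```java
import java.util.Comparator;
import java.util.List;

class RetrievalDemo {
    public static void main(String[] args) {
        // Toy embeddings; a real system would obtain these from an embedding model.
        List<Chunk> index = List.of(
                new Chunk("austender-1", "Awarded contracts by agency", new double[]{0.9, 0.1, 0.0}),
                new Chunk("foi-1", "FOI requests and review costs", new double[]{0.1, 0.9, 0.1}),
                new Chunk("leave-1", "Employee leave by department", new double[]{0.0, 0.2, 0.9}));
        double[] query = {0.8, 0.2, 0.1};
        List<Chunk> top = Retriever.topK(index, query, 2); // austender-1, then foi-1
        System.out.println(Retriever.groundedPrompt("Which agencies awarded contracts?", top));
    }
}

// A retrievable text chunk with its source identifier and embedding vector.
record Chunk(String sourceId, String text, double[] embedding) {}

class Retriever {
    // Cosine similarity; returns 0 for zero-length vectors.
    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Dimension mismatch");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0.0;
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Returns the k chunks most similar to the query, most similar first.
    static List<Chunk> topK(List<Chunk> index, double[] query, int k) {
        return index.stream()
                .sorted(Comparator.comparingDouble((Chunk c) -> cosine(c.embedding(), query)).reversed())
                .limit(k)
                .toList();
    }

    // Builds a prompt that limits the model to the retrieved, citable context.
    static String groundedPrompt(String question, List<Chunk> context) {
        StringBuilder sb = new StringBuilder("Answer using only the sources below and cite their IDs.\n");
        for (Chunk c : context) {
            sb.append("[").append(c.sourceId()).append("] ").append(c.text()).append("\n");
        }
        return sb.append("Question: ").append(question).toString();
    }
}
```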

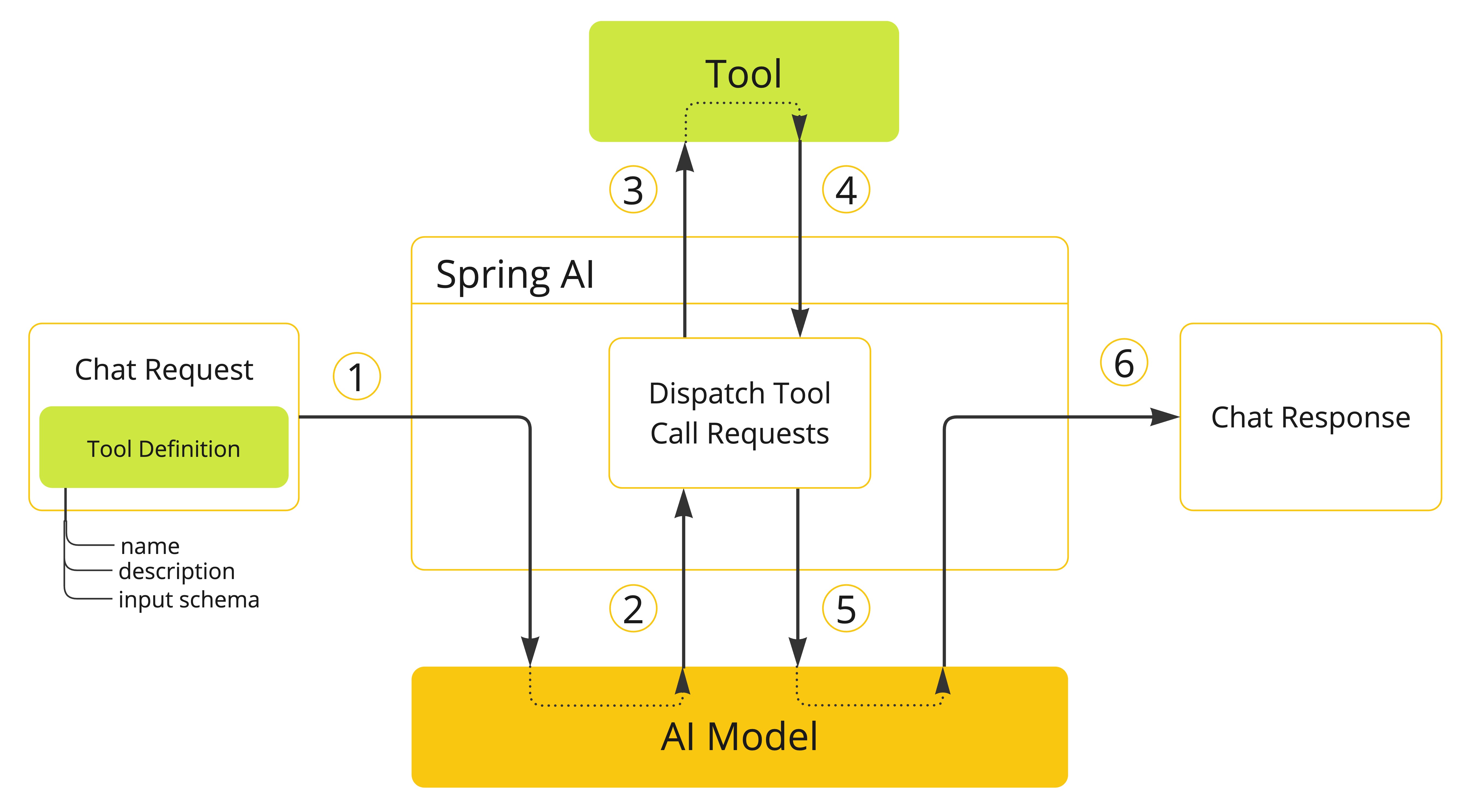

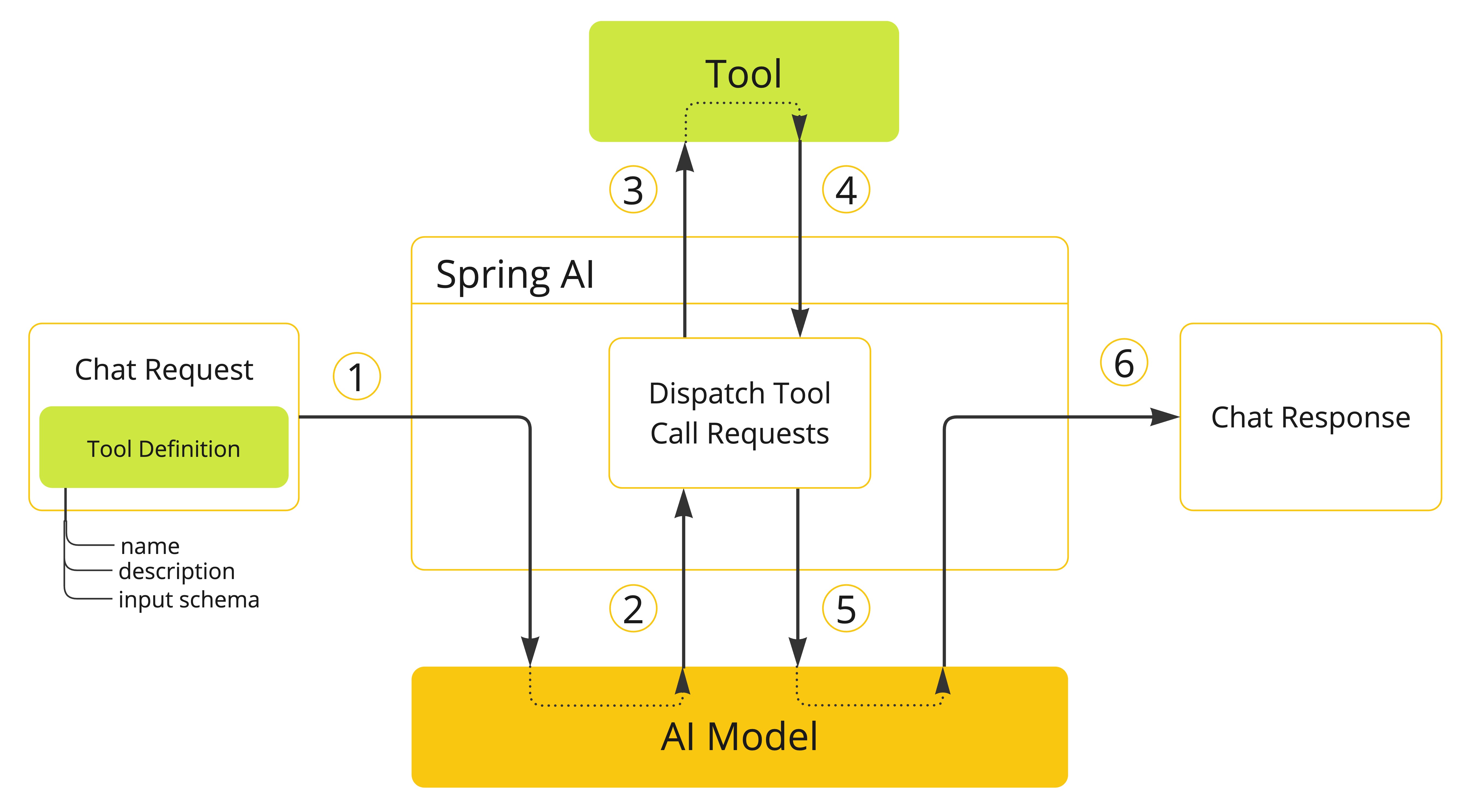

AI Tool Calling

Tool calling enables the AI model to interact with external APIs and systems.

System Architecture

Figure 1: System Architecture Diagram

Steps:

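1. The application defines the tool in the chat request.

2. The model decides to call the tool and returns the tool name with its input parameters.

3. The application identifies the tool by name and executes it with those parameters.

4. The application processes the result.

5. The application returns the result to the model.

6. The model generates the final response, using the result as additional context.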

Figure 2: Tool Calling Overview

Reference: Spring AI Tools API
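The six tool-calling steps can be traced without any framework. The sketch below simulates the loop in plain Java: `StubModel` is a hard-coded stand-in for an LLM, and the `count_contracts` tool, its arguments, and the toy figures are hypothetical. In the project's stack, Spring AI manages this loop; this sketch neither uses nor reproduces the Spring AI API.

```java
import java.util.Map;
import java.util.function.Function;

class ToolCallingDemo {
    public static void main(String[] args) {
        // Toy figures standing in for a procurement data source.
        Map<String, Integer> contractsByAgency = Map.of("Agency X", 3);
        System.out.println(ToolLoop.run("How many contracts did Agency X award?", contractsByAgency));
    }
}

// A tool the application offers to the model: name, description, and handler.
record ToolDefinition(String name, String description, Function<Map<String, String>, String> handler) {}

// The model's request to call a named tool with arguments.
record ToolCall(String toolName, Map<String, String> arguments) {}

// Stub standing in for an LLM: always requests the same tool, then wraps the result.
class StubModel {
    ToolCall decide(String question, Map<String, ToolDefinition> tools) {
        return new ToolCall("count_contracts", Map.of("agency", "Agency X"));
    }

    String answer(String question, String toolResult) {
        return "Answer to \"" + question + "\" based on tool result: " + toolResult;
    }
}

class ToolLoop {
    static String run(String question, Map<String, Integer> contractsByAgency) {
        // Step 1: define the tool offered in the chat request.
        ToolDefinition countContracts = new ToolDefinition(
                "count_contracts", "Counts awarded contracts for an agency",
                args -> {
                    String agency = args.get("agency");
                    return "agency=" + agency + ", contracts=" + contractsByAgency.getOrDefault(agency, 0);
                });
        Map<String, ToolDefinition> tools = Map.of(countContracts.name(), countContracts);
        StubModel model = new StubModel();

        // Step 2: the model decides to call a tool and supplies parameters.
        ToolCall call = model.decide(question, tools);

        // Step 3: the application looks the tool up by name and executes it.
        ToolDefinition tool = tools.get(call.toolName());
        if (tool == null) {
            throw new IllegalStateException("Model requested unknown tool: " + call.toolName());
        }
        String rawResult = tool.handler().apply(call.arguments());

        // Step 4: the application processes the result (a real system might validate or log it).
        String processed = rawResult.strip();

        // Steps 5 and 6: the result goes back to the model, which produces the final response.
        return model.answer(question, processed);
    }
}
```

Keeping tool execution in the application (step 3) rather than in the model means every call can be checked against a known list of tools and recorded in the audit log.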

Vector Database Utilization

- Scalable dataset access

- Contextual semantic awareness

Governance and Compliance

- Alignment with AI principles

- Performance monitoring (accuracy, fairness, accessibility)
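Performance monitoring for accuracy and fairness can start from reviewed answers. The sketch below assumes each answer has been marked correct or incorrect by a reviewer and tagged with a group (for example, a business area or user cohort). It reports overall accuracy, per-group accuracy, and the largest gap between groups as a simple fairness signal. The record names, grouping, and any alert threshold are assumptions; accessibility would need separate measures.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class MonitoringDemo {
    public static void main(String[] args) {
        // Toy reviewer judgements.
        List<Evaluation> evals = List.of(
                new Evaluation("finance", true), new Evaluation("finance", true),
                new Evaluation("hr", true), new Evaluation("hr", false));
        System.out.println(Monitor.report(evals));
    }
}

// One reviewed answer: the group it served and whether a reviewer marked it correct.
record Evaluation(String group, boolean correct) {}

record Report(double overallAccuracy, Map<String, Double> accuracyByGroup, double maxGap) {}

class Monitor {
    static Report report(List<Evaluation> evals) {
        if (evals.isEmpty()) {
            throw new IllegalArgumentException("No evaluations");
        }
        Map<String, int[]> counts = new HashMap<>(); // group -> {correct, total}
        int correct = 0;
        for (Evaluation e : evals) {
            int[] c = counts.computeIfAbsent(e.group(), g -> new int[2]);
            if (e.correct()) {
                c[0]++;
                correct++;
            }
            c[1]++;
        }
        Map<String, Double> byGroup = new HashMap<>();
        counts.forEach((g, c) -> byGroup.put(g, (double) c[0] / c[1]));
        double max = byGroup.values().stream().mapToDouble(Double::doubleValue).max().orElse(0);
        double min = byGroup.values().stream().mapToDouble(Double::doubleValue).min().orElse(0);
        // The gap between the best- and worst-served groups is the fairness signal.
        return new Report((double) correct / evals.size(), Map.copyOf(byGroup), max - min);
    }
}
```

With the sample data in `main`, overall accuracy is 0.75 (3 of 4), finance is 1.0 and HR is 0.5, giving a gap of 0.5.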

Our Solution

An AI chatbot that is accurate, trustworthy, auditable, and inclusive, designed specifically for government decision-making.

Data Story

Government agencies oversee large numbers of datasets but often struggle to extract meaningful insights efficiently. Traditional AI chatbots show potential but fail to meet the extremely high accuracy standards required in public-sector decision-making, where even a 90% correct response rate may be insufficient. This gap creates challenges in trust, accuracy, and accountability.

While recent advancements in AI, including large language models with advanced reasoning capabilities, promise sophisticated analysis, they also introduce risks such as hallucination, where outputs may be factually incorrect or unsupported by reliable sources. For government applications, accuracy and verifiability outweigh advanced reasoning; AI systems must provide grounded, scope-limited responses and allow human users to guide interpretation and decision-making.